We primarily investigate the role of Nested Sampling as a tool to calculate the evidence,

$$ \mathcal{Z} = \int d\theta \mathcal{L} (\theta) \Pi (\theta), $$

where $\mathcal{L}(\theta)$ is the likelihood and $\Pi(\theta)$ is the prior over the parameters $\theta$. Calculating this quantity is indispensable for tasks such as model comparison in physics. However, it is not generally considered a primary quantity of interest in modern machine learning, which is a more abstract discipline. Our work investigates the potentially vital role this quantity could play in explainable AI.
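To make the integral concrete, here is a minimal Python sketch for a one-dimensional toy problem. The Gaussian likelihood, its parameters `MU` and `SIGMA`, and both prior ranges are hypothetical choices for illustration. Drawing $\theta$ from the prior and averaging $\mathcal{L}(\theta)$ is the simplest unbiased estimator of $\mathcal{Z}$; it is used here only because it is transparent, and it degrades rapidly in higher dimensions, which is the regime Nested Sampling is designed for.

```python
import math

import numpy as np

MU, SIGMA = 0.5, 0.1  # hypothetical likelihood parameters


def likelihood(theta):
    """Normalised Gaussian likelihood L(theta) with mean MU and width SIGMA."""
    return np.exp(-0.5 * ((theta - MU) / SIGMA) ** 2) / (math.sqrt(2 * math.pi) * SIGMA)


def evidence_monte_carlo(lo, hi, n=1_000_000, seed=0):
    """Estimate Z = int L(theta) Pi(theta) dtheta for a uniform prior on [lo, hi].

    Drawing theta from the prior turns the integral into an average of L(theta).
    """
    rng = np.random.default_rng(seed)
    return likelihood(rng.uniform(lo, hi, size=n)).mean()


def evidence_exact(lo, hi):
    """Closed form: likelihood mass inside [lo, hi] divided by the prior width."""
    def cdf(x):
        return 0.5 * (1.0 + math.erf((x - MU) / (SIGMA * math.sqrt(2.0))))
    return (cdf(hi) - cdf(lo)) / (hi - lo)


# Two hypothetical models that differ only in prior width: both contain the
# best-fit theta = MU, but the wider prior spends mass where L is negligible.
z_narrow = evidence_monte_carlo(0.0, 1.0)
z_wide = evidence_monte_carlo(-4.5, 5.5)
print(z_narrow, evidence_exact(0.0, 1.0))
print(z_wide, evidence_exact(-4.5, 5.5))
print("Bayes factor (narrow vs wide):", z_narrow / z_wide)
```

Both priors contain the best-fit parameter, yet the wider one has roughly ten times lower evidence because $\Pi(\theta)$ spreads its mass over regions where $\mathcal{L}(\theta)$ is negligible. This automatic Occam penalty is what makes $\mathcal{Z}$ useful for model comparison.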

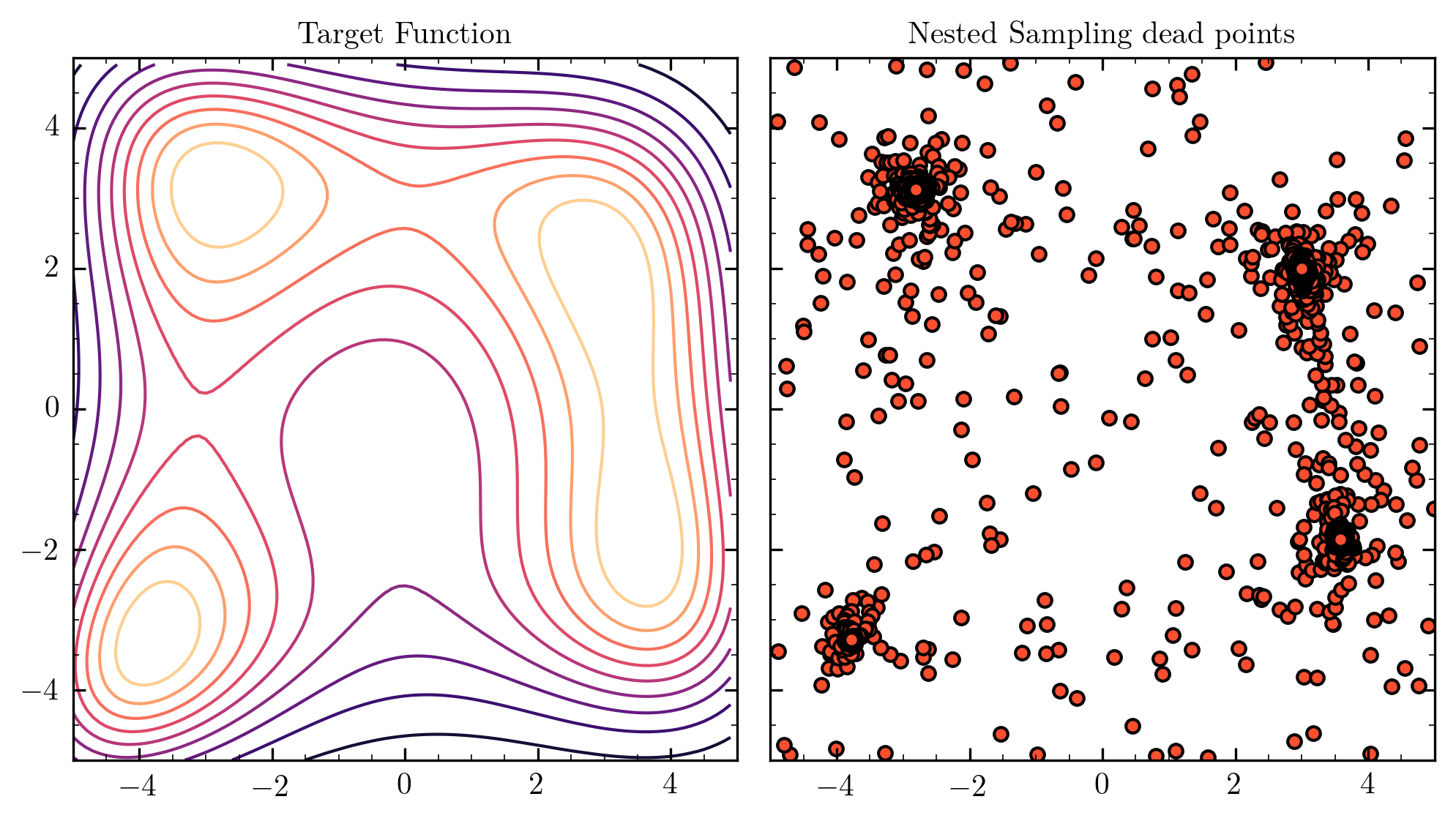

Demonstration of Nested Sampling

Using Nested Sampling, in particular the implementation provided by PolyChord, we can demonstrate the calculation of the evidence integral by sampling over a log-likelihood built from Himmelblau's function, which has four separate global minima.
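The sketch below is a toy nested sampler, not PolyChord: it replaces each discarded point by plain rejection sampling from the prior, which is exact but only workable in a couple of dimensions (PolyChord uses slice sampling instead). The log-likelihood $\log\mathcal{L}(x, y) = -f(x, y)$, with $f$ Himmelblau's function, and the uniform prior on $[-5, 5]^2$ are assumptions, since the exact setup of the demonstration is not given. A brute-force grid estimate of $\log\mathcal{Z}$ is included as a cross-check.

```python
import numpy as np

LO, HI = -5.0, 5.0  # assumed uniform prior box on (x, y)


def himmelblau(x, y):
    """Himmelblau's function: four global minima, all with value 0."""
    return (x**2 + y - 11.0) ** 2 + (x + y**2 - 7.0) ** 2


def log_likelihood(points):
    """Assumed log-likelihood log L = -f(x, y); each minimum of f becomes a mode."""
    return -himmelblau(points[..., 0], points[..., 1])


def nested_sampling(n_live=400, tol=1e-2, seed=1):
    """Toy nested sampler returning (log Z, dead-point log-likelihoods)."""
    rng = np.random.default_rng(seed)
    live = rng.uniform(LO, HI, size=(n_live, 2))
    live_logl = log_likelihood(live)
    log_shrink = np.log1p(-np.exp(-1.0 / n_live))  # log(1 - e^{-1/n_live})
    log_z, log_x, dead_logl = -np.inf, 0.0, []
    while True:
        worst = np.argmin(live_logl)
        logl_star = live_logl[worst]
        # Dead-point weight: L* times the shell volume X_{i-1} - X_i,
        # using the mean shrinkage X_i = X_{i-1} * exp(-1 / n_live).
        log_z = np.logaddexp(log_z, logl_star + log_x + log_shrink)
        dead_logl.append(logl_star)
        log_x -= 1.0 / n_live
        # Rejection-sample the prior until a point satisfies L > L*.
        batch = int(min(max(np.exp(-log_x), 100), 200_000))
        while True:
            trial = rng.uniform(LO, HI, size=(batch, 2))
            trial_logl = log_likelihood(trial)
            hits = np.flatnonzero(trial_logl > logl_star)
            if hits.size:
                live[worst] = trial[hits[0]]
                live_logl[worst] = trial_logl[hits[0]]
                break
        # Stop once the live points could add at most a fraction tol to Z.
        if live_logl.max() + log_x < log_z + np.log(tol):
            break
    # The remaining live points share the final prior volume X equally.
    log_live = np.logaddexp.reduce(live_logl) + log_x - np.log(n_live)
    return np.logaddexp(log_z, log_live), np.array(dead_logl)


def log_evidence_grid(n=2000):
    """Brute-force reference: with a uniform prior, Z is the mean of L over the box."""
    h = (HI - LO) / n
    g = np.linspace(LO + h / 2, HI - h / 2, n)
    xx, yy = np.meshgrid(g, g)
    return np.log(np.mean(np.exp(-himmelblau(xx, yy))))


log_z_ns, dead_logl = nested_sampling()
print("nested sampling log Z:", log_z_ns)
print("grid quadrature log Z:", log_evidence_grid())
```

The two estimates should agree to within the statistical error of nested sampling, which scales roughly as $\sqrt{H/n_\text{live}}$, where $H$ is the information (Kullback-Leibler divergence) of the posterior relative to the prior.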

Taking the dead points left behind by the algorithm, each weighted by its contribution to the evidence, we obtain an effective posterior sample that is particularly well suited to multimodal target likelihoods.
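A minimal sketch of how dead points become a posterior sample: dead point $i$ receives weight $w_i \propto \mathcal{L}_i (X_{i-1} - X_i)$, using the standard mean-shrinkage estimate $X_i = e^{-i/n_\text{live}}$ for the enclosed prior volume, and the weights can then be resampled to an equally weighted sample. The helper names are hypothetical, the final live points are ignored for brevity, and the dead points come from a synthetic 1D Gaussian whose $\mathcal{L}(X)$ relation is known in closed form, standing in for real sampler output.

```python
import numpy as np


def posterior_weights(dead_logl, n_live):
    """Normalised posterior weights for nested-sampling dead points.

    Assumes a fixed number of live points and X_i = exp(-i / n_live);
    the final live points are ignored here.
    """
    dead_logl = np.asarray(dead_logl, dtype=float)
    i = np.arange(1, dead_logl.size + 1)
    # log of the shell volume X_{i-1} - X_i = X_{i-1} * (1 - exp(-1/n))
    log_dx = -(i - 1) / n_live + np.log1p(-np.exp(-1.0 / n_live))
    log_w = dead_logl + log_dx
    log_z = np.logaddexp.reduce(log_w)
    return np.exp(log_w - log_z), log_z


def kish_ess(weights):
    """Kish effective sample size: how many equally weighted draws these are worth."""
    return weights.sum() ** 2 / np.sum(weights**2)


def resample(points, weights, n, seed=0):
    """Draw an equally weighted posterior sample from the weighted dead points."""
    rng = np.random.default_rng(seed)
    return points[rng.choice(len(points), size=n, p=weights)]


# Synthetic stand-in for sampler output: L = exp(-theta^2 / (2 sigma^2)) under a
# uniform prior on [-a, a]. The contour enclosing prior mass X has |theta| = a X,
# so dead point i sits at |theta_i| = a X_i (the sign is irrelevant by symmetry).
a, sigma, n_live = 5.0, 0.5, 500
x_i = np.exp(-np.arange(1, 15 * n_live + 1) / n_live)
theta = a * x_i
dead_logl = -0.5 * (theta / sigma) ** 2

w, log_z = posterior_weights(dead_logl, n_live)
print("log Z:", log_z, "exact:", np.log(sigma * np.sqrt(2 * np.pi) / (2 * a)))
print("E[theta^2]:", np.sum(w * theta**2), "exact:", sigma**2)
print("Kish ESS:", kish_ess(w), "of", w.size, "dead points")
equal_weight = resample(theta, w, 1000)
```

The same weights serve both purposes: their unnormalised sum is the evidence estimate, and once normalised they turn the dead points into a posterior sample, so every mode the live points tracked is represented in proportion to its posterior mass.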

Current interests in this field

Gaussian processes dominate thinking in Bayesian deep learning. Are we leaving something important behind by shifting to a purely function-space view?

What does the marginal likelihood over parameters in ML models tell us about inference with modern ML? This is an ongoing program; initial progress is documented here.

Graph neural networks, or other symmetry-exploiting networks, are well suited to sampling paradigms. Can we use parameter-space sampling effectively in this context?

If you are interested in discussing any of these topics, please get in contact!