This project aims to build new techniques at the frontier of Machine Learning (ML) using expertise and technology developed in Cambridge for Bayesian inference. Gaussian Processes are a class of ML model widely used both in ML/AI for scientific research and across industry, so this project provides a good base for a further career involving ML in either setting.

Gaussian Processes

Gaussian Processes (GPs) are a flexible, generic ML model that can be used for classification or regression tasks. They have become popular because of their flexibility, their good performance on common ML tasks such as time series analysis and, importantly, their natural Bayesian interpretation. The evidence for each set of model parameters can be calculated and then optimized. This optimization encodes a Bayesian Occam's razor: it naturally penalises overly complicated models and neatly balances goodness of fit against model complexity [1].
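As a minimal sketch of the evidence calculation described above, the snippet below computes the log marginal likelihood of a zero-mean GP on toy data and compares a handful of candidate lengthscales. The squared-exponential kernel, the fixed amplitude and noise level, the synthetic sine data and the function names `rbf_kernel` and `log_evidence` are all assumptions made for illustration, not choices taken from the project.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale, amplitude):
    """Squared-exponential covariance between two sets of 1-D inputs."""
    sq_dist = (x1[:, None] - x2[None, :]) ** 2
    return amplitude**2 * np.exp(-0.5 * sq_dist / lengthscale**2)

def log_evidence(x, y, lengthscale, amplitude, noise):
    """Log marginal likelihood log p(y | x, hyperparameters) of a zero-mean GP."""
    n = len(x)
    K = rbf_kernel(x, x, lengthscale, amplitude) + noise**2 * np.eye(n)
    L = np.linalg.cholesky(K)                      # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    data_fit = -0.5 * y @ alpha                    # rewards agreement with the data
    complexity = -np.sum(np.log(np.diag(L)))       # equals -0.5 * log det K
    return data_fit + complexity - 0.5 * n * np.log(2 * np.pi)

# Toy pseudo time series (an assumption for this sketch): a noisy sine wave.
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 40)
y = np.sin(x) + 0.1 * rng.standard_normal(x.size)

# Evaluate the evidence on a small grid of lengthscales and pick the best one.
lengthscales = np.array([0.05, 0.3, 1.0, 2.0, 5.0, 20.0])
log_z = np.array([log_evidence(x, y, ls, 1.0, 0.1) for ls in lengthscales])
best_lengthscale = lengthscales[np.argmax(log_z)]
```

The `complexity` term is what implements the Occam penalty: a very short lengthscale can fit any data set, so it is penalised for its flexibility, while a very long lengthscale cannot reproduce the oscillation and loses out on `data_fit`. In practice one would optimize continuously over the hyperparameters rather than scan a coarse grid.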

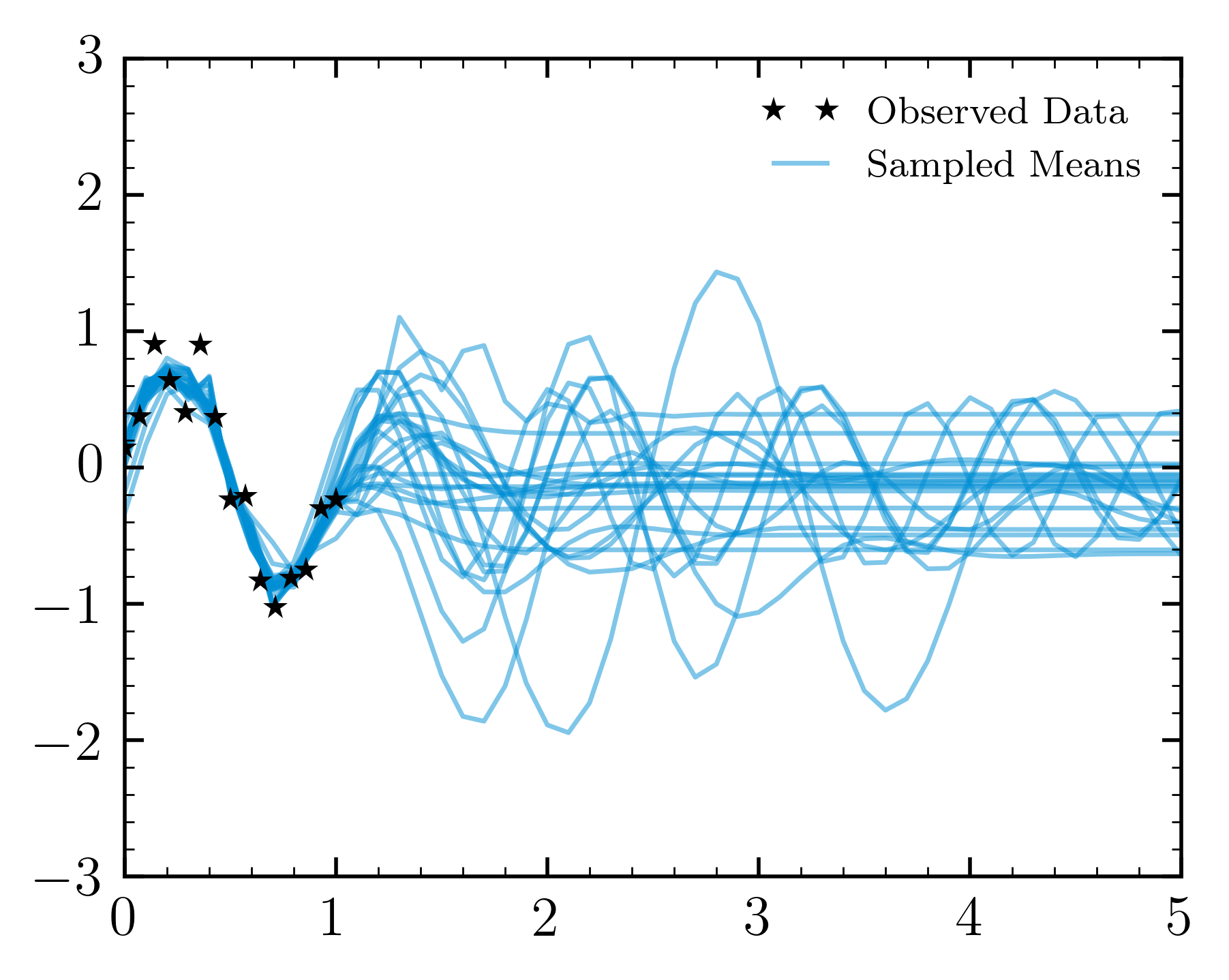

The figure shows a classic example: pseudo time series data, with different realisations of the model drawn around this optimized point. This kind of uncertainty quantification is vital for many key industrial and scientific applications of ML.
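Realisations of this kind can be sketched in a few lines. The example below conditions a zero-mean GP on toy data and draws sample functions from the resulting posterior. The kernel, the hand-picked hyperparameters (standing in for an optimized point), the synthetic data and the names `rbf_kernel` and `gp_posterior` are illustrative assumptions.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale=1.0, amplitude=1.0):
    """Squared-exponential covariance between two sets of 1-D inputs."""
    sq_dist = (x1[:, None] - x2[None, :]) ** 2
    return amplitude**2 * np.exp(-0.5 * sq_dist / lengthscale**2)

def gp_posterior(x_train, y_train, x_test, noise=0.1, **kernel_args):
    """Posterior mean and covariance of the latent function at x_test."""
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise**2 * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    v = np.linalg.solve(L, K_s)
    return K_s.T @ alpha, K_ss - v.T @ v

# Toy pseudo time series (an assumption for this sketch).
rng = np.random.default_rng(1)
x_train = np.sort(rng.uniform(0, 10, 15))
y_train = np.sin(x_train) + 0.1 * rng.standard_normal(x_train.size)
x_test = np.linspace(0, 12, 100)   # extends past the data to show extrapolation

mean, cov = gp_posterior(x_train, y_train, x_test, noise=0.1, lengthscale=1.0)

# A small jitter keeps the covariance numerically positive semi-definite.
jitter = 1e-8 * np.eye(len(x_test))
draws = rng.multivariate_normal(mean, cov + jitter, size=5)

# Pointwise one-sigma band, often plotted alongside the individual draws.
std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
```

Each row of `draws` is one realisation of the model. The spread between rows is small close to the training points and grows in the extrapolation region beyond the data, which is the uncertainty quantification referred to above.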

This project will examine, at a fundamental level, how we optimize these sorts of models. It will build the picture of these probabilistic models from the ground up, giving a good introduction to key concepts of Bayesian ML.

Bayesian ML and Marginalised Gaussian Processes

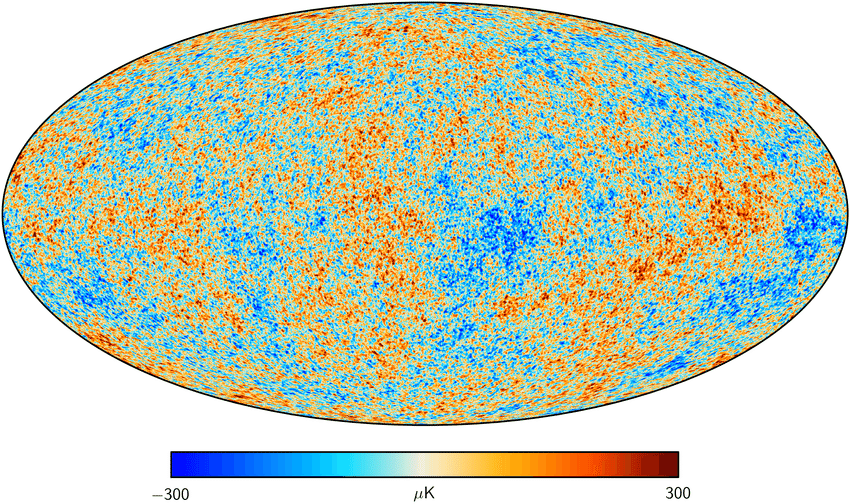

The core idea is to build on recent work from the frontier of machine learning that takes an algorithm developed in this group and applies it to the training of Gaussian Process models [2]. Nested Sampling, as realised in PolyChord [3], was developed in this group to analyse data from the very early universe and is a key tool for rigorous Bayesian inference. By bringing together this group's expertise in running and interpreting this algorithm with frontier ML research, we aim to answer some core questions at the heart of Bayesian ML.

Application to Astronomy and Cosmology

Astronomy and Cosmology both make extensive use of “time series” style data, a domain to which GPs are well suited [4]. There are a number of well-established, accessible datasets and problems, and we can consider a variety of modelling problems as test cases for the novel Bayesian approach to GPs we will explore. Two potential examples are modelling tensions between early- and late-time Cosmology [5] and modelling the time delays of lensed quasars [4].

The proposed marginalisation aims to further refine the uncertainty estimates on the predictions from a Gaussian Process, and existing analyses serve as a useful benchmark for this new application of the algorithm. By studying astronomical data, we can test whether this approach sheds any new light on the nature of the universe.
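To illustrate what marginalising over hyperparameters does to the predictive uncertainty, the sketch below averages GP predictions over many lengthscales, weighted by their evidence, and keeps the single best-evidence prediction for comparison. A brute-force log-spaced grid stands in for Nested Sampling here, which is a deliberate simplification and not the method of [2]. The implied log-uniform prior, the unit-amplitude kernel, the toy data and the name `fit_and_predict` are likewise assumptions for illustration.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale):
    """Unit-amplitude squared-exponential covariance for 1-D inputs."""
    return np.exp(-0.5 * (x1[:, None] - x2[None, :]) ** 2 / lengthscale**2)

def fit_and_predict(x, y, x_new, lengthscale, noise=0.1):
    """Return (log evidence, predictive mean, predictive variance) for one lengthscale."""
    n = len(x)
    L = np.linalg.cholesky(rbf_kernel(x, x, lengthscale) + noise**2 * np.eye(n))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    log_z = -0.5 * y @ alpha - np.sum(np.log(np.diag(L))) - 0.5 * n * np.log(2 * np.pi)
    k_new = rbf_kernel(x, x_new, lengthscale)
    v = np.linalg.solve(L, k_new)
    mean = k_new.T @ alpha
    var = 1.0 - np.sum(v**2, axis=0)   # latent-function variance; prior variance is 1
    return log_z, mean, var

# Toy pseudo time series (an assumption for this sketch).
rng = np.random.default_rng(2)
x = np.sort(rng.uniform(0, 10, 12))
y = np.sin(x) + 0.1 * rng.standard_normal(x.size)
x_new = np.linspace(0, 12, 50)

# Stand-in for posterior samples: a log-spaced grid, i.e. a log-uniform prior.
lengthscales = np.logspace(-1, 1, 30)
results = [fit_and_predict(x, y, x_new, ls) for ls in lengthscales]
log_z = np.array([r[0] for r in results])
means = np.array([r[1] for r in results])
variances = np.array([r[2] for r in results])

# Posterior weights over the grid, normalised stably in log space.
weights = np.exp(log_z - log_z.max())
weights /= weights.sum()

# Mixture of Gaussians: law of total expectation and total variance.
marg_mean = weights @ means
marg_var = weights @ (variances + means**2) - marg_mean**2

# Prediction from the single best-evidence lengthscale, for comparison.
best = np.argmax(log_z)
opt_mean, opt_var = means[best], variances[best]
```

Comparing `marg_var` with `opt_var` shows how much of the predictive uncertainty comes from not knowing the hyperparameters. The marginalised variance includes the spread of the individual means, a contribution that a single optimized model cannot represent.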

Technical skills and references

- This project will primarily involve developing research code in Python

- Familiarity with ML libraries in Python is a plus, e.g. sklearn, torch

- Little knowledge of Astronomy or Cosmology is required, and grounding in Bayesian statistics/ML will be developed through the project

[1] Gaussian Processes for Machine Learning, Rasmussen and Williams [book webpage]

[2] Marginalised Gaussian Processes with Nested Sampling, F. Simpson et al. [2010.16344]

[3] PolyChord: next-generation nested sampling, W. Handley et al. [1506.00171]

[4] Gaussian Process regression for astronomical time-series, S. Aigrain and D. Foreman-Mackey [2209.08940]

[5] Gaussian processes and effective field theory of $f(T)$ gravity under the $H_0$ tension, X. Ren et al. [2203.01926]